

Basics of Informatics Systems

Study Questions

Fall Semester 2015

0. What is MOOC, what are the main MOOC sources?

Massive Open Online Courses: free-of-charge university courses from the best universities, which involve online lectures, online tests and online "diplomas".

Sources:

- Alison

- TEDx

- iTunes University

- Lynda

- Udacity

- Udemy

- etc.

1. Define the term informatics.

Informatics is the science of computer information systems. As an academic field it involves the practice of information processing, and the engineering of information systems.

Information is a “quantity” that can express the occurrence of an event or series of events (or the existence of one state or the other) through a series of elementary symbols.

2. What other fields of study are related to informatics?

a Computer engineering

is a discipline that combines elements of both electrical engineering and computer science

b Computer science

or computing science, is the study of the theoretical foundations of information and computation and their implementation and application in computer systems

c Cybernetics

is the study of communication and control, typically involving regulatory feedback, in living organisms, in machines and organizations and their combinations

d Information theory

is a discipline in applied mathematics involving the quantification of information

e Systems theory

is an interdisciplinary field that studies the properties of systems as a whole

f Telecommunications engineering

focuses on the transmission of information across a channel such as coax cable, optical fiber or free space.

3. What are the main characteristics of engineers?

- He/She always takes things apart to find out how they work...

- Also, has an insatiable desire to learn about new things...

- Never listens to someone that says something cannot be done

- He/She is typically bored to tears in a standard school..

Meaning, good grades are not really important...

Skills and abilities:

- Be a natural problem solver

- Understand the nature of materials

- Good core mathematics skills

- Have decent observational abilities

- Cost accounting skill

4. What are the main factors to be a good engineer?

EDUCATION:

Ability to Understand Multiple Disciplines, Technical Credibility

RESPONSIBILITIES:

Early Responsibility, Work in Several Technical Areas,

Ability to Produce a Product (On Time, In Budget)

ON-THE-JOB TRAINING:

Hands-on Hardware Experience, Knowledge of / Experience with System Simulations

ATTITUDES WITH PEOPLE:

Good Communicator and Listener, Can Communicate to all Management Levels, Patient, Curious, Honest, Friendly

MANAGEMENT SKILLS:

Ability to See "Big Picture", Team Management Skills, Understanding of Program Management

ATTITUDES TOWARD WORK:

Learns Independently, Willing to Take Risks, Willing to Take Responsibility, Disciplined, Not Parochial, Pragmatic, "Can Do" Attitude, Adaptable

5. What is Moore’s Law?

Moore's Law is the observation that the number of transistors in a dense integrated circuit doubles approximately every two years.

The observation is named after Gordon E. Moore, the co-founder of Intel and Fairchild Semiconductor, whose 1965 paper described a doubling every year in the number of components per integrated circuit, and projected this rate of growth would continue for at least another decade. In 1975, looking forward to the next decade, he revised the forecast to doubling every two years.

A commonly quoted version: the number of transistors on microprocessors doubles every 18 months.

6. What is Gilder’s Law?

An assertion by George Gilder, visionary author of Telecosm, which states that "bandwidth grows at least three times faster than computer power." This means that if computer power doubles every eighteen months (per Moore's Law), then communications power doubles every six months.

The bandwidth of communication networks triples every year.

7. What is Metcalfe’s Law?

Metcalfe's law states that the value of a telecommunications network is proportional to the square of the number of connected users of the system (n^2).

The value of a network is proportional to the square of the number of nodes.

8. What is Shugart’s Law? (Alan Shugart)

The price of stored bits is halved every 18 months.

9. What is Rutgers’ Law?

The storage capacity of computers doubles every year.

10. Define the following basic concepts of computer science: hardware, software, firmware, netware (groupware, courseware, freeware, shareware), open source software.

- hardware is the collection of physical elements that constitutes a computer system. Computer hardware is the physical parts or components of a computer

- software is any set of machine-readable instructions that directs a computer's processor to perform specific operations.

- firmware is a type of software that provides control, monitoring and data manipulation of engineered products and systems. Typical examples of devices containing firmware are embedded systems (such as traffic lights, consumer appliances, and digital watches), computers, computer peripherals, mobile phones, and digital cameras. The firmware contained in these devices provides the low-level control program for the device.

- groupware is application software designed to help people involved in a common task to achieve their goals.

- courseware is software whose primary purpose is teaching or self-learning.

- freeware is computer software that is available for use at no monetary cost, which may have restrictions such as restricted functionality, restricted time period of usage or redistribution prohibited, and for which source code is not available.

- shareware is a type of proprietary software which is provided (initially) free of charge to users, who are allowed and encouraged to make and share copies of the program, which helps to distribute it.

- Open-source software (OSS) is computer software with its source code made available with a license in which the copyright holder provides the rights to study, change, and distribute the software to anyone and for any purpose.

11. What are the characteristics of analog technology?

The method of data representation: Mapping physical values (variables) to other physical values

The process of problem solving: Analogy, model

Analogy is the process of representing information about a particular subject (the analogue or source system) by another particular subject (the target system).

Model: both systems can be described with the same differential equation

Accuracy: Limited: 0.01-0.001%

Implementation: Constructed (Operational) amplifiers

The process of computation: Parallel

The method of programming: Connections using wires (training)

12. What are the main components of analog computers?

-Precision resistors and capacitors

-operational amplifiers

-Multipliers

-potentiometers

-fixed-function generators

13. What are the main characteristics of analog technology?

Advantages: vividly descriptive, fast due to parallel functioning, efficiency, (usually) has less distortion.

Disadvantages: limited accuracy, sensitivity to noise, errors accumulate during use.

14. What factors contributed to the widespread use of analog technology?

1. Our world is analog, so it would be obvious to use the same paradigm.

2. Easier to build

3. Requires less technological development level

15. What are the main characteristics of digital technology?

The method of data representation:

Each physical value represented by (binary) numbers.

The process of problem solving:

Works with Algorithms

Accuracy:

Depends (on the length) of the representation

Implementation:

Logical (digital) circuits

The process of computation:

Serial

The method of programming:

Algorithms based on instructions

16. What factors contributed to the widespread use of digital technology?

Compared with analog technology, digital technology currently achieves higher component density and is easier to program, to design, and to write algorithms for.

17. Define the following terms: signal, signal parameter, message, data character?

Signal: A value or a fluctuating physical quantity, such as voltage, current, or electric field strength, whose variations represent coded information.

Signal parameter: The feature, or a feature change of the signal, which represents the information.

Message: A signal made for forwarding.

Data: A signal made for processing.

18. What are the main characteristics of analog and digital signals and how can they be categorized?

Analog signal: a signal that is continuous in both time and amplitude.

Digital signal: a signal that is discrete in time and quantized in amplitude.

19. How can analog signals be converted to digital signals (A/D)?

The A/D converter converts a voltage variable (the samples of the previously sampled analog signal) to numbers. It is a time-consuming operation. As the conversion of the actual sample must be finished before the arrival of the next sample, usually the time of the analog-digital conversion determines the limit of the speed of sampling. Properties: frequency, resolution.

20. Describe the common types of A/D conversion.

Clock-counter type converter

Successive approximation

Flash converter

21. What is the principle behind the conversion of digital signals to analog signals (D/A)?

It converts a number to a voltage. The conversion is practically instantaneous. Its typical parameters are the number of bits and the output voltage range. An all-0s input produces the minimum of the output voltage range, while an all-1s input produces the maximum.

22. What is the Shannon – Nyquist sampling principle?

The sampling rate (frequency) must be greater than twice the highest frequency component of the signal.

f_sampling > 2 * f_max
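As a small illustration of the rule above, the following sketch checks the sampling condition and computes the frequency at which an undersampled tone would appear after sampling. The function names and the example frequencies are assumptions chosen for illustration only.

```python
def satisfies_sampling_rule(f_sampling, f_max):
    """True if the sampling rate is more than twice the highest frequency."""
    return f_sampling > 2 * f_max


def apparent_frequency(f_signal, f_sampling):
    """Frequency at which a sampled sine wave shows up, folded into
    the range 0 .. f_sampling / 2 (this folding is what aliasing means)."""
    f = f_signal % f_sampling
    return min(f, f_sampling - f)


# Hypothetical example: audio sampled at 44.1 kHz.
ok = satisfies_sampling_rule(44_100, 20_000)       # 20 kHz tone is fine
too_high = satisfies_sampling_rule(44_100, 30_000)  # 30 kHz tone is not
alias = apparent_frequency(30_000, 44_100)          # where the 30 kHz tone lands
```

In this example the 30 kHz tone violates the rule and would be indistinguishable from a 14.1 kHz tone after sampling.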

23. During the A/D conversion process, what factors determine the resolution?

Sampling rate (frequency) and sample resolution (binary word length).

24. What is the theory and application of oversampling?

The signal is sampled at a multiple of the required sampling frequency, so the unwanted image (alias) signals appear only around multiples of that higher frequency. The anti-aliasing filter therefore does not have to cut off so steeply.

25. What is the one-bit D/A principle?

If the value of the signal is 0, a lower frequency is put on the circuit; if it is 1, a higher frequency.

26. What are the main characteristics of A/D and D/A converters?

A/D: resolution, dynamics (ratio of the smallest and biggest value, D = 10*log10(2^n)), resolution (quantization) error (max: 1/2^n), conversion speed, linearity, offset error.

D/A: principle of operation (weighted, ladder, etc); one/two/four bit D/A converter.

27. How can integer numbers be written in binary form?

Calculated in the binary system on n bits.

The biggest number representable = 2^n - 1.

If the MSB is the sign (0 = positive, 1 = negative), then the biggest number representable = 2^(n-1) - 1. The most negative number representable = -2^(n-1) (in binary complement form).

28. What is the essence and significance of binary complement representation (examples)?

With this representation we can subtract two numbers using addition. We put the subtrahend into two's complement code and add it to the minuend. The result is the sum without the overflow bit.
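A minimal sketch of this subtraction-by-addition idea, assuming an 8-bit word; the function names and the example values 13 and 6 are illustrative choices, not part of the course material.

```python
def twos_complement(value, n_bits=8):
    """Return the n-bit two's complement of a non-negative value."""
    mask = (1 << n_bits) - 1
    return ((value ^ mask) + 1) & mask   # invert all bits, add 1, keep n bits


def subtract_by_adding(minuend, subtrahend, n_bits=8):
    """Compute minuend - subtrahend using only addition."""
    mask = (1 << n_bits) - 1
    total = minuend + twos_complement(subtrahend, n_bits)
    return total & mask                  # drop the overflow bit


complement_of_6 = format(twos_complement(6), "08b")
difference = subtract_by_adding(13, 6)
```

Here 6 is 00000110, its two's complement is 11111010, and 13 + 250 = 263; dropping the ninth (overflow) bit leaves 7.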

29. What is the essence and significance of octal and hexadecimal number representation?

Octal and hex let humans work with more symbols per digit while staying easy to convert to and from binary, because every hex digit represents 4 binary digits (16=2^4) and every octal digit represents 3 (8=2^3). I think hex wins over octal because it can easily be used to represent bytes and 16/32/64-bit numbers.

30. What are ASCII and UNI codes?

ASCII: American Standard Code for Information Interchange

Unicode: the international standard containing the uniform coding of the different character systems.

Both are character coding standards.

The ASCII is one of the oldest standards, fundamentally it contains the English alphabet and the most common characters.

31. Who were the pioneers of computer technology in Hungary and what lasting achievements are they known for?

John von Neumann

Farkas Kempelen

Tivadar Puskás

Tihamér Nemes: cybernetics

Dániel Muszka: robotics

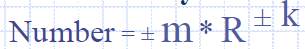

32. What are the main components in binary real number representation?

R: the base of the numeric system

m: mantissa

k:exponent (characteristic)

33. How do you write the decimal number 7.75 as a binary real number?

1. Convert the integer part to binary:

7 = 111

2. Convert the fraction part to binary by repeatedly multiplying by 2 and taking the integer digit:

0.75 * 2 = 1.50 -> 1

0.50 * 2 = 1.00 -> 1

so 0.75 = .11

3. Combine and normalize the number:

111.11

if the range is 0.5-1 then: 0.11111 * 2^3

if the range is 1-2 then: 1.1111 * 2^2

4. Now I’m going to use the 0.5-1 range. In that case the mantissa is 11111 and the characteristic is +3. The base is of course 2.
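The conversion steps above can be sketched as follows. This is only an illustration: the function names are hypothetical, and the normalization helper assumes a positive number of at least 1 and the 0.5-1 mantissa range.

```python
def to_binary_real(x, max_frac_bits=8):
    """Split x into binary digit strings for the integer and fraction parts."""
    int_part = int(x)
    frac = x - int_part
    int_bits = bin(int_part)[2:]
    frac_bits = []
    # Multiply the fraction by 2 repeatedly; each integer digit is the next bit.
    while frac > 0 and len(frac_bits) < max_frac_bits:
        frac *= 2
        digit = int(frac)
        frac_bits.append(str(digit))
        frac -= digit
    return int_bits, "".join(frac_bits)


def normalize_half_to_one(int_bits, frac_bits):
    """Normalize to 0.1xxx * 2^k (assumes the value is >= 1)."""
    mantissa = int_bits + frac_bits     # digits after the binary point
    characteristic = len(int_bits)      # how far the point moved to the left
    return mantissa, characteristic


int_bits, frac_bits = to_binary_real(7.75)
mantissa, characteristic = normalize_half_to_one(int_bits, frac_bits)
```

For 7.75 this yields the digit strings 111 and 11, the mantissa 11111 and the characteristic 3.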

34. What is the absolute, binary complement, and biased representation of exponents? (For example, how do you represent the 7.75 decimal number in two bytes if the mantissa is represented by the lower byte, the sign of the number is represented by the MSB, and the exponent is represented by the following 7 bits of the higher byte using the 63 biased form? The MSB of the mantissa is not represented, it is implied.)

The exponent is 3 in decimal if we use the 0.5-1 range, so if we convert it to 8 bits:

00000011, this is the absolute-value (sign and magnitude) form. It would be 10000011 if the exponent were negative (-3).

The binary complement form is the same as the first because the exponent is positive. It would be

11111101 if the exponent were negative (-3).

In the biased form we use 63 as zero and add 3 to it -> 66, which is

01000010. If the exponent were -3, we would have to use 60.
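The three exponent representations can be compared with a short sketch. The helper names are hypothetical, and the 8-bit width and the bias of 63 are taken from the example in the question.

```python
def sign_magnitude(value, n_bits=8):
    """Absolute-value form: one sign bit followed by the magnitude."""
    sign = "1" if value < 0 else "0"
    return sign + format(abs(value), f"0{n_bits - 1}b")


def binary_complement(value, n_bits=8):
    """Two's complement form on n bits."""
    return format(value & ((1 << n_bits) - 1), f"0{n_bits}b")


def biased(value, bias=63, n_bits=8):
    """Biased form: the bias stands for zero, the exponent is added to it."""
    return format(value + bias, f"0{n_bits}b")


forms_plus_3 = (sign_magnitude(3), binary_complement(3), biased(3))
forms_minus_3 = (sign_magnitude(-3), binary_complement(-3), biased(-3))
```

For +3 the first two forms coincide (00000011) and the biased form is 66; for -3 all three differ, with the biased form being 60.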

35. What is the essence of the "little-endian" and "big-endian" number representation?

(Put the AFC5 hexadecimal number into both forms.)

Neither form is inherently better; the choice depends on the manufacturer. E.g.: Intel (x86) = little-endian, Motorola (68000) = big-endian.

Big endian: we put the most significant byte at the smallest address:

AF

C5

Little endian: we put the least significant byte at the smallest address:

C5

AF
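The two byte orders for AFC5 can be reproduced with Python's built-in `int.to_bytes`; index 0 of each result plays the role of the smallest address. The variable names are illustrative.

```python
value = 0xAFC5

# Index 0 corresponds to the smallest memory address.
big_endian = value.to_bytes(2, byteorder="big")        # most significant byte first
little_endian = value.to_bytes(2, byteorder="little")  # least significant byte first

big_hex = big_endian.hex().upper()
little_hex = little_endian.hex().upper()
```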

36. What are the everyday and scientific definitions of the basic concepts of information?

Everyday definitions:

news, notice, data, message, knowledge, announcement, statement, coverage, instruction etc.

Scientific definition:

A quantity that can express, through a series of elementary symbols, the occurrence of an event or series of events (or the existence of one state or the other).

37. Who were the pioneers in information theory?

R. Fisher, R. Hartley

38. What is the Hartley formula (for measuring the quantity of information) and what is the prerequisite for its use? For example, how many bits are required to represent two cards that have been selected out of a 32-card deck of Hungarian playing cards?

H = k * log n

H: quantity of information when a message is chosen

k: number of characters in the message

n: number of characters of the alphabet in the message

Prerequisite: every character of the alphabet is equally likely.

So for the example above: H = 2 * log2(32) = 2 * 5 = 10. The answer is 10 bits.
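A minimal sketch of the Hartley formula applied to the card example, assuming the logarithm is taken to base 2 so that the result is in bits. The function name is hypothetical.

```python
import math


def hartley_information(k, n):
    """Information in bits of a message of k symbols, each drawn from an
    alphabet of n equally likely symbols: H = k * log2(n)."""
    return k * math.log2(n)


bits_for_two_cards = hartley_information(k=2, n=32)
```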

39. What is the Shannon-Wiener formula (for measuring the quantity of information) and what is the prerequisite for its use? For example, how many bits are required to represent one character in a message if the frequency of the characters is the following: A(8), B(4), C(2), D(2)

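H = - sum of p_i * log2(p_i) over all characters, where p_i is the relative frequency (probability) of character i. Prerequisite: the probabilities of the characters are known.

Here p(A) = 8/16, p(B) = 4/16, p(C) = p(D) = 2/16, so H = (8/16)*1 + (4/16)*2 + 2*(2/16)*3 = 1.75 bits per character.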
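The Shannon formula H = -sum(p_i * log2(p_i)) can be evaluated for the character frequencies given in the question with a few lines of code. This is a sketch; the function name is hypothetical and the probabilities are simply the relative frequencies.

```python
import math


def shannon_entropy(counts):
    """Average information per character in bits: H = -sum(p * log2(p))."""
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total)
                for c in counts.values() if c > 0)


frequencies = {"A": 8, "B": 4, "C": 2, "D": 2}
bits_per_character = shannon_entropy(frequencies)
```

With these frequencies the result is 1.75 bits per character, less than the 2 bits that four equally likely characters would need.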

40. What are the units of measure of information?

H = k * log10(n) (Hartley)

H = k * log2(n) (Shannon, bit)

H = k * loge(n) (Nat)

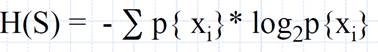

41. What is the connection between information and entropy?

We are using the same formula for the physical entropy (in physics) and the information (in informatics), but of course the base is different.

42. What are the main characteristics of the information (entropy) function?

If the probability of an element is zero or 100 per cent, the outcome is certain and we have to ask the fewest questions, so the function approaches the x axis (0 questions) at its two ends. The more evenly the probability is spread (the more random the outcome), the more questions we have to ask.

43. Define relative entropy and redundance and provide examples.

The relative entropy is the ratio of the actual entropy to the maximal entropy. The redundancy is (1 – relative entropy) * 100%, and it gives the percentage of the message that can be left out without changing the information.

Let a discrete distribution have probability function p_k, and let a second discrete distribution have probability function q_k. Then the relative entropy of p with respect to q, also called the Kullback-Leibler distance, is defined by

d = sum over k of p_k * log2(p_k / q_k)
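As an example of relative entropy and redundancy, the sketch below uses a hypothetical four-symbol source with probabilities 1/2, 1/4, 1/8, 1/8. The function names are illustrative, and the base-2 logarithm in the Kullback-Leibler distance is an assumption.

```python
import math


def entropy(probs):
    """Actual entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def redundancy_percent(probs):
    """(1 - relative entropy) * 100, where relative entropy = H / H_max."""
    h_max = math.log2(len(probs))        # entropy if all symbols were equally likely
    relative_entropy = entropy(probs) / h_max
    return (1 - relative_entropy) * 100


def kullback_leibler(p, q):
    """Relative entropy of distribution p with respect to q, in bits."""
    return sum(pk * math.log2(pk / qk) for pk, qk in zip(p, q) if pk > 0)


source = [0.5, 0.25, 0.125, 0.125]
uniform = [0.25] * 4

redundancy = redundancy_percent(source)
distance = kullback_leibler(source, uniform)
```

Here the actual entropy is 1.75 bits against a maximum of 2 bits, so the relative entropy is 0.875 and the redundancy is 12.5%. The Kullback-Leibler distance from the uniform distribution is 0.25 bits, which equals the gap between maximal and actual entropy.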

44. What is the block diagram for the coding and decoding process?

45. What is the main difference between source coding and channel coding?

The transformation of U to A with minimal redundancy “Source coding”

The transformation of U to A with increased redundancy “Channel coding”

46. What do we mean by non-reducible code?

We cannot compress it any further without losing data.

47. What is the definition, advantage and disadvantage of statistics-based data compression?

Advantage: ensures the minimal average code length (minimum redundancy) Disadvantage: We need to transmit the statistics to the receiver site, thus we need to send more data.

48. What is the objective of minimum redundancy code?

Knowing the frequency (or the probability) of the symbols in the message we can generate a code which

-can be decoded unambiguously

-has variable (word) length

-has code word lengths that depend on the frequency of the symbols in the message (frequent symbol -> shorter code, rare symbol -> longer code)

49. Do classic minimum redundancy codes result in loss of information?

Nope, because it can be decoded unambiguously.

50. What is the objective of the Shannon-Fano algorithm and how does it work (and explain the block diagram)?

(So the objective of the algorithm is to compress the data ie. using less bits to store a character.)

51. What is the objective of the Huffman algorithm and how does it work (explain the block diagram)?

52. Using Shannon-Fano coding, how do we write the code for a message containing BC characters, given the following character set and frequency: A(16), B(8), C(7), D (6), E (5)
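One way to work this exercise is sketched below. It assumes the usual textbook formulation of Shannon-Fano coding: sort the symbols by decreasing frequency, split the list where the two halves' totals are as equal as possible, give one half a 0 and the other a 1, and repeat within each half. The function name is hypothetical, and which half gets the 0 is a convention.

```python
def shannon_fano(freqs):
    """Return {symbol: code} built by recursive near-equal splitting."""
    symbols = sorted(freqs, key=freqs.get, reverse=True)
    codes = {s: "" for s in symbols}

    def split(group):
        if len(group) < 2:
            return
        total = sum(freqs[s] for s in group)
        running, best_cut, best_diff = 0, 1, None
        # Try every cut position and keep the most balanced one.
        for cut in range(1, len(group)):
            running += freqs[group[cut - 1]]
            diff = abs(total - 2 * running)   # |left total - right total|
            if best_diff is None or diff < best_diff:
                best_cut, best_diff = cut, diff
        left, right = group[:best_cut], group[best_cut:]
        for s in left:
            codes[s] += "0"
        for s in right:
            codes[s] += "1"
        split(left)
        split(right)

    split(symbols)
    return codes


codes = shannon_fano({"A": 16, "B": 8, "C": 7, "D": 6, "E": 5})
message_code = "".join(codes[ch] for ch in "BC")
```

With these frequencies the sketch assigns A=00, B=01, C=10, D=110, E=111, so the message BC becomes 0110.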

53. Using the same characters, apply the Huffman code.
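A possible sketch of Huffman coding for the character set A(16), B(8), C(7), D(6), E(5): the two least frequent entries are merged repeatedly until one tree remains. The function name is hypothetical, and the assignment of 0 and 1 at each merge is a convention, so only the code lengths should be relied on.

```python
import heapq
from itertools import count


def huffman_code(freqs):
    """Return {symbol: code} for a dict of symbol frequencies."""
    tiebreak = count()   # keeps heap entries comparable when weights are equal
    heap = [(weight, next(tiebreak), {symbol: ""})
            for symbol, weight in freqs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        w_low, _, low = heapq.heappop(heap)     # least frequent subtree
        w_high, _, high = heapq.heappop(heap)   # second least frequent subtree
        merged = {s: "0" + c for s, c in low.items()}
        merged.update({s: "1" + c for s, c in high.items()})
        heapq.heappush(heap, (w_low + w_high, next(tiebreak), merged))
    return heap[0][2]


codes = huffman_code({"A": 16, "B": 8, "C": 7, "D": 6, "E": 5})
message_code = "".join(codes[ch] for ch in "BC")
```

The merges are 5+6=11, 7+8=15, 11+15=26 and 16+26=42, so A gets a 1-bit code and B, C, D, E get 3-bit codes; the message BC therefore takes 6 bits.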

54. What is the principle behind arithmetic coding? (For example, how do you arrive at the arithmetic code of XZ, given the following character set and frequency: X(5) Y(3), Z(2)

(I am not sure yet)

55. How do you decode the arithmetic code?

(I am not sure yet)

56. What is the data compression principle used in CCITT 3- type fax machines?

Modified Huffman encoding: the lengths of the white and black pixel runs are coded with Huffman codes.

57. For what data types is it possible/recommended to use data compression?

58. What is the principle behind, advantage and disadvantage of dictionary-based data compression?

We have a dictionary, and instead of sending the word we send only the location of that word in the dictionary; the decoder then reads the word from the dictionary.

The advantage is that we don’t have to send as much data as we would if we sent the whole word.

The disadvantage is that both the receiver and the source must have the same dictionary.

59. What is LZ77 coding?

It is a dictionary-based data compression method, published in “A Universal Algorithm for Sequential Data Compression”

1977, Abraham Lempel and Jacob Ziv

60. What are the first 5 characters in an LZ77 code if the dictionary window contains the series ABCDECDH and the window to be coded is SXHORTABCDEFGH? (6,5,C)

61. What are the limitations of LZ77 coding?

1. It can only search in the “dictionary”

2. The length of the information to be coded is limited

62. What is the principle behind adaptive data compression coding (explain the block diagram)?

Basic principle: based on the source information it builds the code step by step, becoming increasingly efficient. (Instead of sending the statistics the decoder builds up the same statistics at the receiver side)

63. What is the principle behind LZ78 coding?

-It builds the code step by step

-The dictionary registers all of the strings the first time they occur in the message and assigns a row number to each string. This row number is used as a short substitute in the outgoing message when a longer string occurs often.

-This is an adaptive and dictionary based coding.

64. What is the compressed code of the following series of characters using LZ78 coding: XYZXYZXYZXYZXYZXYZXYZXYZXYZXYZ

solution: 0X0Y0Z1Y3X2Z4Z7X6X9Y5Y11Z
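The solution above can be reproduced with a minimal LZ78 encoder sketch. The function name and the compact output format (row number followed by the next character, with 0 meaning "no prefix in the dictionary") are assumptions chosen to match the notation of the solution.

```python
def lz78_encode(text):
    """Return a list of (row_number, next_char) pairs."""
    dictionary = {}   # phrase -> row number, starting at 1
    output = []
    phrase = ""
    for ch in text:
        if phrase + ch in dictionary:
            phrase += ch                      # keep extending a known phrase
        else:
            output.append((dictionary.get(phrase, 0), ch))
            dictionary[phrase + ch] = len(dictionary) + 1   # register new phrase
            phrase = ""
    if phrase:                                # leftover phrase already in dictionary
        output.append((dictionary[phrase], ""))
    return output


pairs = lz78_encode("XYZ" * 10)
compact = "".join(f"{row}{ch}" for row, ch in pairs)
```

The 30 input characters are covered by 12 pairs, and the dictionary grows to the rows X, Y, Z, XY, ZX, YZ, XYZ, XYZX, YZX, YZXY, ZXY, ZXYZ.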

65. What is the principle behind LZW coding (what is the block diagram)?

66. What are the advantages and disadvantages of using greater than minimum redundancy?

The advantage is that we can correct errors (we can restore the information after an error during the transmission). The disadvantage is that we need to store (and send) more bits.

67. How can we categorize the errors?

1. Hard errors (permanent)

2. Soft errors (volatile)

a. “Traditional” soft errors

i. temperature related errors

ii. system noise related errors

iii. pattern sensitivity

b. Radiation related errors

68. What is the frequency of different types of errors?

2.b. occurs more often than 2.a. and 2. occurs more often than 1

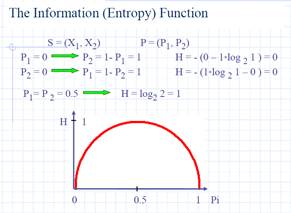

69. How can we determine the frequency of errors and the MTBF?

λ = number of errors / 10^6 hours

MTBF: mean time between failures: it’s just the average time between the errors.

MTBF= 1/λ
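A small numeric sketch of the relation MTBF = 1/λ; the function name and the figure of 2 errors per 10^6 hours are hypothetical example values.

```python
def mtbf_hours(errors, observed_hours=1_000_000):
    """Mean time between failures from an error count over an observation time."""
    failure_rate = errors / observed_hours   # lambda, in errors per hour
    return 1 / failure_rate


example_mtbf = mtbf_hours(errors=2)   # 2 errors per 10^6 hours
```

With 2 errors per million hours, the mean time between failures is about 500,000 hours.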

70. What is the typical "bathtub" curve for errors? Describe it.

In a nutshell: everything has a relatively large number of errors in its early stage, and as it becomes older the number of errors increases again. Between these two stages it has the “useful lifespan”.

71. What is the purpose of parity and CRC code?

The purpose of parity and CRC codes is error detection.

72. What is the even and odd parity of the following binary sequence: 11101010 ?

11101010 1 even parity

11101010 0 odd parity
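The parity bits above can be checked with a short sketch; the function name is hypothetical. Even parity adds a bit so that the total number of 1s is even, odd parity so that it is odd.

```python
def parity_bit(bits, even=True):
    """Return the parity bit ('0' or '1') for a string of binary digits."""
    ones = bits.count("1")
    bit_for_even = ones % 2          # 1 only if the count of 1s is currently odd
    return str(bit_for_even if even else 1 - bit_for_even)


sequence = "11101010"                # contains five 1s
even_parity = parity_bit(sequence, even=True)
odd_parity = parity_bit(sequence, even=False)
```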

73. What is the principle of error detection and correction (what is the block diagram)?

The sender calculates the parity and sends it together with the data; the receiver calculates the parity again and compares the two. If they are not the same, an error occurred.

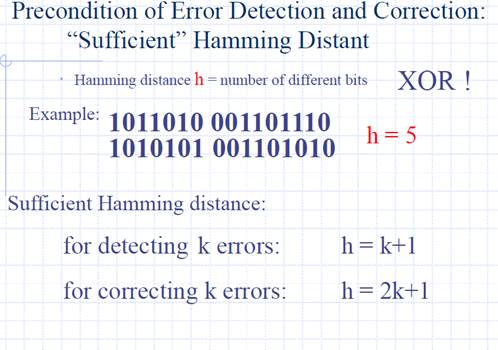

74. What is the definition of Hamming distance?

The Hamming distance is the number of bit positions in which two code words differ. (We compare them using the XOR operation and count the 1s.)
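A minimal sketch of computing the Hamming distance with XOR; the function name and the example code words are illustrative.

```python
def hamming_distance(a, b):
    """Number of differing bit positions between two equal-length bit strings."""
    if len(a) != len(b):
        raise ValueError("code words must have the same length")
    difference = int(a, 2) ^ int(b, 2)   # XOR leaves a 1 wherever the bits differ
    return bin(difference).count("1")


d_small = hamming_distance("1011", "1001")
d_large = hamming_distance("11101010", "01101001")
```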

75. What is the definition of the Arrhenius formula and its main applications?

We use it to test the device for errors at a higher temperature (where errors occur more often), and then we can calculate the result back to room temperature.

76. What does the word burn-in mean in the manufacturing of electronic devices?

We use that word when we test a device for errors at a high temperature.

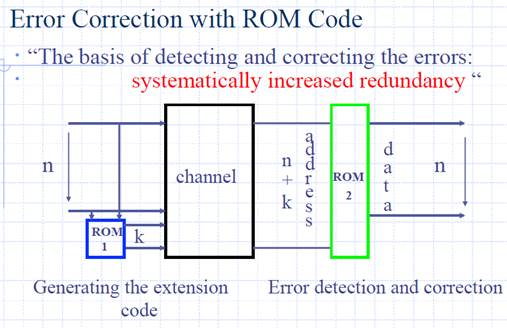

77. What is the Hamming error correction code and the principle behind automatic error correction (what is the block diagram if we determine the supplementary code using combination network)?

78. How can we arrive at the error correction code using only read only memory (ROM)?

79. How can an error be detected and corrected if the input of the information channel is the following 4 bits: 1011 and any of these bits may be lost in the channel?
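One common way to approach this exercise is a Hamming(7,4) code, sketched below. This assumes that "lost" means a single bit is flipped during transmission, and it uses the conventional layout with parity bits at positions 1, 2 and 4; the function names are hypothetical and the course may expect a different layout.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits as [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4      # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4      # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4      # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_correct(code_bits):
    """Return (corrected data bits, position of the flipped bit or 0)."""
    c = list(code_bits)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3   # 1-based position of the error, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1          # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], syndrome


sent = hamming74_encode([1, 0, 1, 1])
received = list(sent)
received[4] ^= 1                      # simulate an error at position 5
recovered, error_position = hamming74_correct(received)
```

The input 1011 is sent as 0110011; after the simulated error the syndrome points at position 5 and the original four bits are recovered.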

80. What are the advantages and disadvantages of fault tolerant systems?

The advantage is that it will not stop working due to an error. The disadvantage is that we will not be notified if an error occurs, so we could take a faulty bit for a completely intact one.

81. What are the main types, application and period of use of historic computing devices?

- Reckoning with fingers

- Abacuses

- Mechanical calculating machines

- Electronic calculation (computing) machines

82. What is the principle behind counting on fingers (in Europe, China, in ancient times and today)?

83. What are the main types of abacuses (Russian (schoti), Chinese (suan-pan), Japanese (soroban)?

84. How does a slide rule work?

85. Who made the first mechanical counting devices?

• First adding machine (using gears)

Blaise Pascal (1623 – 1662)

• First multiplying machine

Gottfried Wilhelm Leibniz (1646-1716)

(• First Mass-produced Calculating Machine “Thomas Arithmometer”

M. Charles Xavier Thomas de Colmar)

86. What is the major difference between a calculator and a computer?

The computer has memory that holds both the data and the programs, while a calculator does not.

87. What are the most significant active components in the history of electronics?

- Electron tube (~1900)

- Transistor (1948)

- Integrated circuit (1958)

- Microprocessor (1971)

88. Who were the major figures in the development of the electronic tube, the transistor, the integrated circuit and the microprocessor?

Vacuum tube:

Thomas Alva Edison (1883): incandescent cathode

Philip Lenard (1903)

A. R. Wehnelt (1904)

Transistor:

John Bardeen, Walter Brattain, William Shockley, 1948, Bell Labs

ICs:

Jack St. Clair Kilby, 1958 (1st germanium IC)

Robert Noyce, 1959 (1st silicon IC)

Microprocessors:

Ted Hoff 1971 (Intel 4004)

89. What are the different computer generations?

- First generation

- Second generation

- Third generation

- Fourth generation

- Fifth generation (not yet developed)

90. What are the characteristics of first-generation computers?

- Vacuum-tube electronics

- Processor-centric organization

- Performance: 10^3-10^4 instructions/sec

- Large size

- Huge power consumption

- High price

- Small number of systems manufactured

- Operative memory: delay line or storage (Williams) tube

- Machine level programming

91. What are the characteristics of second-generation computers?

- Semiconductor (transistor) electronics

- Memory-centric (ferrite core operative memory)

- Performance: 10^4-10^5 inst./sec

- Significantly reduced size and energy consumption

- The emergence of computer families

- Channel-based input-output system

- High level programming languages

- The introduction of operating systems

- The spread of data processing, flow control applications

- Batch processing

92. What are the characteristics of third-generation computers?

- Integrated circuit-based electronics

- Semiconductor operative memory

- Performance: 10^6-10^7 inst./sec

- Modular structure

- The introduction of multiprogramming and time-shared operation

- Good reliability (MTBF)

- Small size

93. What are the characteristics of fourth-generation computers?

- LSI/VLSI-based technology

- Multiprocessor organization

o Small Scale Integrated Circuit

o Large Scale integrated Circuit

o Very Large Scale Integrated Circuit

- The increased significance of the software

- The emergence and widespread use of computer networks

94. What were the goals of the fifth-generation computer development program?

- Specialized, knowledge based operation

- Parallel operation

- Logic programming language (Prolog)

- Humanized user interface (input-output)

o speech understanding

o voice output

o handwriting recognition

- Separate problem solving module

- Main program phases:

o 3 years: knowledge gathering

o 4 years: prototype development

o 3 years: preparation for mass production

95. Why were these goals not reached?

96. What do we mean today by modern personal computers and what are the main characteristics (processors, storage, bandwidth, peripheral devices...)?

97. Who were the pioneers of computer technology in Hungary and what innovations were of prime importance in the history of informatics?

-Farkas Kempelen 1734-1804

Talking machine (1791), Chess machine (1769), typing and printing machine for the blind

-Tivadar Puskás (1844-1893) -telecommunication

Telephone center (1878-)

Telephone Newsletter (1893)

-Tihamér Nemes (1895-1960) -cybernetics

Logical machine, Walking machine, Chess machine, Patents for color TV

-László Kalmár (1905-1976)

Logical machine, formula controlled machine, ladybug from Szeged

-László Kozma (1902-1983)

Decimal automatic calculator, MESz 1, the 1st Hungarian computer

-Rezső Tarján (1908-1978) –circuit design

B1 (Budapest 1) design based on the ENIAC

M3 (Moscow 3) the first Hungarian M3 computer based on the Russian M3

-Dennis Gábor (1900-1979)

Holography

Error-resistive information storage

Research areas:

“Cryotechnics”

Electronics communication theory

Tv development

High speed oscilloscope

-John von Neumann (1903-1957)

Research areas:

Game theory

Ring theory

Theory of almost-periodic signals

The demonstration of the identity of the Heisenberg matrix mechanics and the Schrödinger wave mechanics

Theory of computers

98. Who was John von Neumann and what were his main accomplishments?

A Hungarian-born mathematician who also trained as a chemical engineer. He wrote the First Draft of a Report on the EDVAC and designed the von Neumann architecture.

99. What was John von Neumann’s role in the development of computer technology?

100. What were the main conclusions of the EDVAC report?

Dr. László Kutor

Date: 2015-12-11
