
Exception-Handling Performance Considerations

The developer community actively debates the performance of exception handling. Some people claim that exception handling performance is so bad that they refuse to even use exception handling. However, I contend that in an object-oriented platform, exception handling is not an option; it is mandatory. And besides, if you didn't use it, what would you use instead? Would you have your methods return true/false to indicate success/failure or perhaps some error code enum type? Well, if you did this, then you have the worst of both worlds: the CLR and the class library code will throw exceptions and your code will return error codes. You'd have to now deal with both of these in your code.

It's difficult to compare performance between exception handling and the more conventional means of reporting errors (such as HRESULTs, special return codes, and so forth). If you write code to check the return value of every method call and filter the return value up to your own callers, your application's performance will be seriously affected. But performance aside, the amount of additional coding you must do and the potential for mistakes is incredibly high when you write code to check the return value of every method. Exception handling is a much better alternative.
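To make the contrast concrete, here is a minimal sketch of the two styles. All type and method names (ErrorCode, ErrorStyles, ReadSettingCode, ReadSetting, and the "retries" setting) are hypothetical and exist only for illustration; they are not taken from any library.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical error-code type, for illustration only.
public enum ErrorCode { Ok, NotFound, Invalid }

public static class ErrorStyles {
    // Error-code style: each layer checks the callee's code and forwards it by hand.
    public static ErrorCode ReadSettingCode(String name, out Int32 value) {
        value = 0;
        String text;
        ErrorCode ec = LookupCode(name, out text);
        if (ec != ErrorCode.Ok) return ec;   // forward the failure to our own caller
        if (!Int32.TryParse(text, out value)) return ErrorCode.Invalid;
        return ErrorCode.Ok;
    }

    private static ErrorCode LookupCode(String name, out String text) {
        text = (name == "retries") ? "3" : null;
        return (text == null) ? ErrorCode.NotFound : ErrorCode.Ok;
    }

    // Exception style: the success path is all that is written; failures
    // propagate to whichever caller chooses to catch them.
    public static Int32 ReadSetting(String name) {
        return Int32.Parse(Lookup(name));
    }

    private static String Lookup(String name) {
        if (name != "retries") throw new KeyNotFoundException(name);
        return "3";
    }
}
```

In the error-code version, forgetting a single check silently loses the failure; in the exception version there is nothing to forget.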

However, exception handling has a price: unmanaged C++ compilers must generate code to track which objects have been constructed successfully. The compiler must also generate code that, when an exception is caught, calls the destructor of each successfully constructed object. It's great that the compiler takes on this burden, but it generates a lot of bookkeeping code in your application, adversely affecting code size and execution time.

On the other hand, managed compilers have it much easier because managed objects are allocated in the managed heap, which is monitored by the garbage collector. If an object is successfully constructed and an exception is thrown, the garbage collector will eventually free the object's memory. Compilers don't need to emit any bookkeeping code to track which objects are constructed successfully and don't need to ensure that a destructor has been called. Compared to unmanaged C++, this means that less code is generated by the compiler, and less code has to execute at run time, resulting in better performance for your application.

Over the years, I've used exception handling in different programming languages, different operating systems, and different CPU architectures. In each case, exception handling is implemented differently with each implementation having its pros and cons with respect to performance. Some implementations compile exception handling constructs directly into a method, whereas other implementations store information related to exception handling in a data table associated with the method; this table is accessed only if an exception is thrown. Some compilers can't inline methods that contain exception handlers, and some compilers won't enregister variables if the method contains exception handlers.

The point is that you can't determine how much additional overhead is added to an application when using exception handling. In the managed world, it's even more difficult to tell because your assembly's code can run on any platform that supports the .NET Framework. So the code produced by the JIT compiler to manage exception handling when your assembly is running on an x86 machine will be very different from the code produced by the JIT compiler when your code is running on an x64 or ARM processor. Also, JIT compilers associated with other CLR implementations (such as Microsoft's .NET Compact Framework or the open-source Mono project) are likely to produce different code.

Actually, I've been able to test some of my own code with a few different JIT compilers that Microsoft has internally, and the difference in performance that I've observed has been quite dramatic and surprising. The point is that you must test your code on the various platforms that you expect your users to run on, and make changes accordingly. Again, I wouldn't worry about the performance of using exception handling; the benefits typically far outweigh any negative performance impact.
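One rough way to test this on a given platform is a small timing harness built on System.Diagnostics.Stopwatch. The sketch below is only an assumption about how such a measurement might be set up: the ExceptionCost class and its members are hypothetical, and the numbers it produces depend on the JIT compiler, the CPU, whether a debugger is attached, and the build configuration.

```csharp
using System;
using System.Diagnostics;
using System.Runtime.CompilerServices;

// Hypothetical measurement helper, for illustration only.
public static class ExceptionCost {
    public static Int32 LastFailureCount;

    // Elapsed milliseconds for 'iterations' operations that each throw and catch.
    public static Int64 TimeThrowing(Int32 iterations) {
        Stopwatch sw = Stopwatch.StartNew();
        for (Int32 i = 0; i < iterations; i++) {
            try { throw new InvalidOperationException(); }
            catch (InvalidOperationException) { /* swallowed: only the cost is of interest */ }
        }
        sw.Stop();
        return sw.ElapsedMilliseconds;
    }

    // Elapsed milliseconds for the same number of operations that report
    // failure through a Boolean return value instead.
    public static Int64 TimeReturning(Int32 iterations) {
        Int32 failures = 0;
        Stopwatch sw = Stopwatch.StartNew();
        for (Int32 i = 0; i < iterations; i++) {
            if (!AlwaysFails()) failures++;
        }
        sw.Stop();
        LastFailureCount = failures;   // keep the loop's result observable
        return sw.ElapsedMilliseconds;
    }

    [MethodImpl(MethodImplOptions.NoInlining)]
    private static Boolean AlwaysFails() { return false; }
}
```

Calling both methods with the same iteration count on each target platform gives a crude comparison; a dedicated benchmarking tool would give more trustworthy figures.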

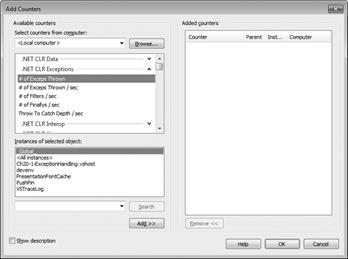

If you're interested in seeing how exception handling impacts the performance of your code, you can use the Performance Monitor tool that comes with Windows. The screen in Figure 20-8 shows the exception-related counters that are installed along with the .NET Framework.

Occasionally, you come across a method that you call frequently that has a high failure rate. In this situation, the performance hit of having exceptions thrown can be intolerable. For example, Microsoft heard back from several customers who were calling Int32's Parse method, frequently passing in data entered from an end user that could not be parsed. Because Parse was called frequently, the performance hit of throwing and catching the exceptions was taking a large toll on the application's overall performance.


FIGURE 20-8 Performance Monitor showing the .NET CLR Exceptions counters.

To address customers' concerns and to satisfy all the guidelines described in this chapter, Microsoft added a new method to the Int32 class. This new method is called TryParse, and it has two overloads that look like the following.

public static Boolean TryParse(String s, out Int32 result);
public static Boolean TryParse(String s, NumberStyles styles,
   IFormatProvider provider, out Int32 result);

You'll notice that these methods return a Boolean that indicates whether the String passed in contains characters that can be parsed into an Int32. These methods also return an output parameter named result. If the methods return true, result will contain the result of parsing the string into a 32-bit integer. If the methods return false, result will contain 0, but you really shouldn't execute any code that looks at it anyway.
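As a small illustration of the difference at the call site, the following sketch counts the valid integers in a batch of user input both ways. The ParseDemo class and its method names are hypothetical; only Int32.Parse and Int32.TryParse come from the class library.

```csharp
using System;

// Hypothetical helper, for illustration only.
public static class ParseDemo {
    // TryParse style: malformed input costs a false return value, not an exception.
    public static Int32 CountValid(String[] inputs) {
        Int32 valid = 0;
        foreach (String s in inputs) {
            Int32 result;
            if (Int32.TryParse(s, out result)) valid++;
        }
        return valid;
    }

    // Parse style: each malformed input throws and is caught.
    public static Int32 CountValidWithParse(String[] inputs) {
        Int32 valid = 0;
        foreach (String s in inputs) {
            try { Int32.Parse(s); valid++; }
            catch (FormatException) { }     // not a number
            catch (OverflowException) { }   // too large or too small for an Int32
        }
        return valid;
    }
}
```

Both methods return the same count for the same non-null inputs; the difference is that the second pays for a throw and a catch on every bad entry.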

One thing I want to make absolutely clear: A TryXxx method's Boolean return value returns false to indicate one and only one type of failure. The method should still throw exceptions for any other type of failure. For example, Int32's TryParse throws an ArgumentException if the styles argument is not valid, and it is certainly still possible to have an OutOfMemoryException thrown when calling TryParse.
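The distinction can be seen in a short sketch. The TryParseFailures class and its Classify method are hypothetical; the invalid argument used in the note that follows relies on the documented rule that AllowHexSpecifier may only be combined with the flags that make up HexNumber.

```csharp
using System;
using System.Globalization;

// Hypothetical helper, for illustration only.
public static class TryParseFailures {
    public static String Classify(String s, NumberStyles styles) {
        try {
            Int32 result;
            Boolean ok = Int32.TryParse(s, styles, CultureInfo.InvariantCulture, out result);
            // false means exactly one thing: the text could not be parsed.
            return ok ? "parsed" : "not parsable";
        }
        catch (ArgumentException) {
            // A bad styles argument is a different kind of failure and is still thrown.
            return "invalid styles";
        }
    }
}
```

For example, Classify("abc", NumberStyles.Integer) takes the false path, whereas Classify("12", NumberStyles.AllowHexSpecifier | NumberStyles.AllowDecimalPoint) ends up in the catch block.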

I also want to make it clear that object-oriented programming allows programmers to be productive. One way that it does this is by not exposing error codes in a type's members. In other words, constructors, methods, properties, etc. are all defined with the idea that calling them won't fail. And, if defined correctly, for most uses of a member, it will not fail, and there will be no performance hit because an exception will not be thrown.

When defining types and their members, you should define the members so that it is unlikely that they will fail for the common scenarios in which you expect your types to be used. If you later hear from users that they are dissatisfied with the performance due to exceptions being thrown, then and only then should you consider adding TryXxx methods. In other words, you should produce the best object model first and then, if users push back, add some TryXxx methods to your type so that the users who experience performance trouble can benefit. Users who are not experiencing performance trouble should continue to use the non-TryXxx versions of the methods because this is the better object model.
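A minimal sketch of how a type might offer both members follows. The Percentage type is hypothetical and exists only to show the shape of the pattern: Parse is the primary member and throws on failure, and TryParse is the later addition that returns false for the single expected failure (text that is not a valid percentage).

```csharp
using System;

// Hypothetical value type, for illustration only.
public struct Percentage {
    public readonly Int32 Value;
    private Percentage(Int32 value) { Value = value; }

    // Primary member: callers assume success; failure is reported by an exception.
    public static Percentage Parse(String s) {
        Percentage p;
        if (!TryParse(s, out p))
            throw new FormatException("Expected an integer from 0 to 100 followed by '%'.");
        return p;
    }

    // Added for callers with a high failure rate: false means "not a valid percentage".
    public static Boolean TryParse(String s, out Percentage result) {
        result = default(Percentage);
        if (s == null || !s.EndsWith("%", StringComparison.Ordinal)) return false;
        Int32 n;
        if (!Int32.TryParse(s.Substring(0, s.Length - 1), out n)) return false;
        if (n < 0 || n > 100) return false;
        result = new Percentage(n);
        return true;
    }
}
```

Implementing Parse in terms of TryParse keeps the validation rules in one place, which is one reasonable design choice among several.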

Constrained Execution Regions (CERs)

Many applications don't need to be robust and recover from any and all kinds of failures. This is true of many client applications like Notepad.exe and Calc.exe. And, of course, many of us have seen Microsoft Office applications like WinWord.exe, Excel.exe, and Outlook.exe terminate due to unhandled exceptions. Also, many server-side applications, like web servers, are stateless and are automatically restarted if they fail due to an unhandled exception. Of course some servers, like SQL Server, are all about state management and having data lost due to an unhandled exception is potentially much more disastrous.

In the CLR, we have AppDomains (discussed in Chapter 22), which contain state. When an AppDomain is unloaded, all its state is unloaded. And so, if a thread in an AppDomain experiences an unhandled exception, it is OK to unload the AppDomain (which destroys all its state) without terminating the whole process. [8]

[8] This is definitely true if the thread lives its whole life inside a single AppDomain (like in the ASP.NET and managed SQL Server stored procedure scenarios). But you might have to terminate the whole process if a thread crosses AppDomain boundaries during its lifetime.

By definition, a CER is a block of code that must be resilient to failure. Because AppDomains can be unloaded, destroying their state, CERs are typically used to manipulate any state that is shared by multiple AppDomains or processes. CERs are useful when trying to maintain state in the face of exceptions that get thrown unexpectedly. Sometimes we refer to these kinds of exceptions as asynchronous exceptions. For example, when calling a method, the CLR has to load an assembly, create a type object in the AppDomain's loader heap, call the type's static constructor, JIT IL into native code, and so on. Any of these operations could fail, and the CLR reports the failure by throwing an exception.

If any of these operations fail within a catch or finally block, then your error recovery or cleanup code won't execute in its entirety. Here is an example of code that exhibits the potential problem.

private static void Demo1() {
   try {
      Console.WriteLine("In try");
   }
   finally {
      // Type1's static constructor is implicitly called in here
      Type1.M();
   }
}

private sealed class Type1 {
   static Type1() {
      // if this throws an exception, M won't get called
      Console.WriteLine("Type1's static ctor called");
   }

   public static void M() { }
}

When I run the preceding code, I get the following output.

In try

Type1's static ctor called

What we want is to not even start executing the code in the preceding try block unless we know that the code in the associated catch and finally blocks is guaranteed (or as close as we can get to guaranteed) to execute. We can accomplish this by modifying the code as follows.

private static void Demo2() {
   // Force the code in the finally to be eagerly prepared
   RuntimeHelpers.PrepareConstrainedRegions(); // System.Runtime.CompilerServices namespace
   try {
      Console.WriteLine("In try");
   }
   finally {
      // Type2's static constructor is implicitly called in here
      Type2.M();
   }
}

public class Type2 {
   static Type2() {
      Console.WriteLine("Type2's static ctor called");
   }

   // Use this attribute defined in the System.Runtime.ConstrainedExecution namespace
   [ReliabilityContract(Consistency.WillNotCorruptState, Cer.Success)]
   public static void M() { }
}

Now, when I run this version of the code, I get the following output.

Type2's static ctor called
In try

The PrepareConstrainedRegions method is a very special method. When the JIT compiler sees this method being called immediately before a try block, it will eagerly compile the code in the try's catch and finally blocks. The JIT compiler will load any assemblies, create any type objects, invoke any static constructors, and JIT any methods. If any of these operations result in an exception, then the exception occurs before the thread enters the try block.

When the JIT compiler eagerly prepares methods, it also walks the entire call graph, eagerly preparing called methods. However, the JIT compiler only prepares methods that have the ReliabilityContractAttribute applied to them with either Consistency.WillNotCorruptState or Consistency.MayCorruptInstance, because the CLR can't make any guarantees about methods that might corrupt AppDomain or process state. Inside a catch or finally block that you are protecting with a call to PrepareConstrainedRegions, you want to make sure that you only call methods with the ReliabilityContractAttribute set as I've just described.

The ReliabilityContractAttribute looks like this.

public sealed class ReliabilityContractAttribute : Attribute {
   public ReliabilityContractAttribute(Consistency consistencyGuarantee, Cer cer);
   public Cer Cer { get; }
   public Consistency ConsistencyGuarantee { get; }
}

This attribute lets a developer document the reliability contract of a particular method to the method's potential callers. Both the Cer and Consistency types are enumerated types defined as follows. [9]

enum Consistency {

MayCorruptProcess, MayCorruptAppDomain, MayCorruptInstance, WillNotCorruptState

}

enum Cer { None, MayFail, Success }

[9] You can also apply this attribute to an interface, a constructor, a structure, a class, or an assembly to affect the members inside it.

If the method you are writing promises not to corrupt any state, use Consistency.WillNotCorruptState. Otherwise, document what your method does by using one of the other three possible values that match whatever state your method might corrupt. If the method that you are writing promises not to fail, use Cer.Success. Otherwise, use Cer.MayFail. Any method that does not have the ReliabilityContractAttribute applied to it is equivalent to being marked like this.

[ReliabilityContract(Consistency.MayCorruptProcess, Cer.None)]

The Cer.None value indicates that the method makes no CER guarantees. In other words, it wasn't written with CERs in mind; therefore, it may fail and it may or may not report that it failed. Remember that most of these settings are giving a method a way to document what it offers to potential callers so that they know what to expect. The CLR and JIT compiler do not use this information.

When you want to write a reliable method, make it small and constrain what it does. Make sure that it doesn't allocate any objects (no boxing, for example), and don't call any virtual methods or interface methods, use any delegates, or use reflection, because the JIT compiler can't tell what method will actually be called. However, you can manually prepare these methods by calling one of these methods defined by the RuntimeHelpers class.

public static void PrepareMethod(RuntimeMethodHandle method);
public static void PrepareMethod(RuntimeMethodHandle method,
   RuntimeTypeHandle[] instantiation);
public static void PrepareDelegate(Delegate d);
public static void PrepareContractedDelegate(Delegate d);

Note that the compiler and the CLR do nothing to verify that you've written your method to actually live up to the guarantees you document via the ReliabilityContractAttribute. If you do something wrong, then state corruption is possible.
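As a rough sketch of manual preparation, the following code prepares a cleanup delegate before entering the try block whose finally block invokes it. The PrepareDemo class and its members are hypothetical; PrepareDelegate and PrepareMethod are the RuntimeHelpers methods listed earlier. How much eager work these calls actually perform is an assumption that depends on the runtime version in use.

```csharp
using System;
using System.Runtime.CompilerServices;

// Hypothetical demo type, for illustration only.
public static class PrepareDemo {
    public static Int32 CleanupCount;

    private static void Cleanup() { CleanupCount++; }

    public static void Run() {
        Action cleanup = Cleanup;

        // The JIT compiler cannot see through a delegate call, so ask the
        // runtime to prepare the delegate's target method ahead of time.
        RuntimeHelpers.PrepareDelegate(cleanup);

        // The same target can also be prepared by its method handle.
        RuntimeHelpers.PrepareMethod(cleanup.Method.MethodHandle);

        try {
            // Work that may fail would go here.
        }
        finally {
            cleanup();   // target was prepared before the try block was entered
        }
    }
}
```

The point of the ordering is that any failure to JIT-compile the cleanup method surfaces at the Prepare calls, before the try block starts, rather than inside the finally block.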


You should also look at RuntimeHelpers' ExecuteCodeWithGuaranteedCleanup method, which is another way to execute code with guaranteed cleanup.

public static void ExecuteCodeWithGuaranteedCleanup(TryCode code, CleanupCode backoutCode, Object userData);

When calling this method, you pass the body of the try and finally block as callback methods whose prototypes match these two delegates respectively.

public delegate void TryCode(Object userData);

public delegate void CleanupCode(Object userData, Boolean exceptionThrown);
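A minimal usage sketch follows, assuming the .NET Framework, where this method is fully supported (newer .NET versions mark it obsolete and emit a compiler warning). The GuaranteedCleanupDemo class, its Log field, and the "state" argument are hypothetical; TryCode and CleanupCode are the delegate types shown above, which are nested inside RuntimeHelpers.

```csharp
using System;
using System.Runtime.CompilerServices;

// Hypothetical demo type, for illustration only.
public static class GuaranteedCleanupDemo {
    public static String Log = "";

    // Plays the role of the try block's body.
    private static void TryBody(Object userData) {
        Log += "try(" + userData + ");";
    }

    // Plays the role of the finally block's body.
    private static void CleanupBody(Object userData, Boolean exceptionThrown) {
        Log += "cleanup(" + userData + "," + exceptionThrown + ");";
    }

    public static void Run() {
        RuntimeHelpers.ExecuteCodeWithGuaranteedCleanup(
            new RuntimeHelpers.TryCode(TryBody),
            new RuntimeHelpers.CleanupCode(CleanupBody),
            "state");   // passed to both callbacks as userData
    }
}
```

The userData argument is the only channel between the two callbacks, so anything the cleanup code needs must be reachable from it.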

And finally, another way to get guaranteed code execution is to use the CriticalFinalizerObject class, which is explained in great detail in Chapter 21.

Date: 2016-03-03
